Today, Google unveiled its new Pixel 6 and Pixel 6 Pro smartphones. In addition to a new custom chipset and other hardware improvements, Google has added an array of AI-powered photo features that make the most of the two- and three-camera systems inside the Pixel 6 and Pixel 6 Pro, respectively.

Before getting into the new photo features, though, let’s take a look at the camera hardware inside the Pixel 6 and Pixel 6 Pro. The wide camera module in both devices uses a 50MP 1/1.31” sensor (1.2µm pixels) and offers a 26mm full-frame equivalent focal length with an F1.85 aperture. The ultrawide camera module in both devices features a 12MP sensor (1.25µm pixels) and offers a 114-degree field of view with an F2.2 aperture. The 4x telephoto camera module, which is exclusive to the larger Pixel 6 Pro, uses a 48MP 1/2” sensor (0.8µm pixels) and offers a 104mm full-frame equivalent focal length with an F3.5 aperture.
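The '4x' label follows directly from the equivalent focal lengths quoted above: the telephoto's zoom factor relative to the wide camera is simply the ratio of the two full-frame equivalent focal lengths.

```latex
\text{zoom factor} = \frac{f_{\text{tele}}}{f_{\text{wide}}} = \frac{104\,\text{mm}}{26\,\text{mm}} = 4
```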

These camera modules are housed inside what Google refers to as the ‘camera bar,’ a large black protrusion that spans the width of the rear of both smartphones. While this design might make for a larger camera ‘bump’ than on most other smartphones, it should also keep the Pixel 6 and Pixel 6 Pro from wobbling when you set them down on a table, a common problem with other flagship devices that have larger camera arrays.

For video, the Pixel 6 and 6 Pro can capture HDR video at up to 4K60p, using Google's HDRnet technology to pull as much dynamic range as possible from the sensors. Both phones can also record at 240 frames per second at 1080p.

As we’ve come to expect from Google, the camera hardware is only a small part of the equation. Google has also added an expansive array of AI-powered photo features, aided by the custom Tensor chipset inside the Pixel 6 and Pixel 6 Pro devices.

The first feature is Magic Eraser. As the name suggests, this tool removes unwanted elements from an image, such as a person in the background or a distracting sign in the foreground. But unlike the Healing Brush tool we’re used to seeing in Adobe Photoshop and other post-production programs, Magic Eraser goes a step further by automatically detecting elements it deems distracting and suggesting them to users for removal.

Before using the Magic Eraser tool.

After using the Magic Eraser tool.

Even if it doesn’t automatically detect an element you want to remove, you can simply tap on it and Magic Eraser will get rid of it, filling in the space using AI-powered fill technology. This works with new images and older ones, including photos captured in third-party camera apps.

The next AI-powered camera feature is Face Unblur. You know when you’re trying to photograph your child running around indoors, only to realize their face is blurry because they were moving in less-than-ideal lighting? Google’s Face Unblur feature aims to eliminate those blurry shots by taking two photographs at once and intelligently combining them.

Here's how it works: the main camera in the Pixel 6 devices captures the standard image with the best exposure possible, while the ultrawide camera simultaneously captures an image with a faster shutter speed to better freeze the subject’s face. Behind the scenes, Google’s software uses four machine-learning models to take the sharp face from the ultrawide image and mask it on top of the less noisy image from the wide camera, creating a composite in which the most important parts are sharp and in focus.
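The final compositing step described above can be sketched as a per-pixel alpha blend. This is a minimal illustration, not Google's implementation: it assumes the two frames are already aligned and that a face mask already exists (jobs that, in Google's pipeline, fall to its machine-learning models), and `composite_face` is a hypothetical name. Images are plain nested lists of grayscale values to keep the example dependency-free.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities in [0, 1]


def composite_face(clean: Image, sharp: Image, face_mask: Image) -> Image:
    """Blend a sharp (but noisier) face onto a cleaner base frame.

    Assumes all three inputs are the same size and already aligned.
    face_mask holds weights in [0, 1]: 1.0 takes the pixel from `sharp`,
    0.0 keeps the pixel from `clean`, values in between feather the edge.
    """
    if not (len(clean) == len(sharp) == len(face_mask)):
        raise ValueError("images must have the same number of rows")
    out: Image = []
    for row_c, row_s, row_m in zip(clean, sharp, face_mask):
        if not (len(row_c) == len(row_s) == len(row_m)):
            raise ValueError("images must have the same number of columns")
        # Linear blend: mask * sharp + (1 - mask) * clean
        out.append([m * s + (1.0 - m) * c for c, s, m in zip(row_c, row_s, row_m)])
    return out
```

For example, blending the base row `[[0.25, 0.25, 0.25]]` with the sharp row `[[0.75, 0.75, 0.75]]` through the mask `[[0.0, 0.5, 1.0]]` returns `[[0.25, 0.5, 0.75]]`: the unmasked pixel keeps the clean frame, the fully masked pixel takes the sharp frame and the feathered pixel lands halfway between. A soft-edged mask like this helps avoid a visible seam around the face.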

At the opposite end of the spectrum, Google has also developed Motion Mode, another AI-powered photo mode that intelligently detects which elements and subjects in an image should stay sharp and which should be artificially blurred. Google didn’t elaborate on which types of shots this will work with, but it did show examples of blurring a moving subway train behind a portrait subject, blurring the flowing water of a waterfall and adding motion blur to photos of fast-moving athletes.
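Google hasn't said how Motion Mode renders its blur, so the following is only a rough sketch of the general idea under our own assumptions: a simple horizontal box filter stands in for linear motion blur, and a subject mask (hypothetically supplied by a segmentation model) decides which pixels stay sharp. Both function names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities in [0, 1]


def horizontal_motion_blur(img: Image, radius: int) -> Image:
    """Average each pixel with up to `radius` neighbours on each side of its row.

    A horizontal box filter is a crude stand-in for linear motion blur.
    The window is clipped at the image edges, so edge pixels average fewer values.
    """
    if radius < 0:
        raise ValueError("radius must be non-negative")
    out: Image = []
    for row in img:
        w = len(row)
        new_row = []
        for x in range(w):
            lo, hi = max(0, x - radius), min(w, x + radius + 1)
            window = row[lo:hi]
            new_row.append(sum(window) / len(window))
        out.append(new_row)
    return out


def selective_motion_blur(img: Image, subject_mask: Image, radius: int) -> Image:
    """Keep pixels where subject_mask is 1 sharp; blend toward the blurred frame elsewhere."""
    blurred = horizontal_motion_blur(img, radius)
    return [
        [m * orig + (1.0 - m) * blur for orig, blur, m in zip(r_o, r_b, r_m)]
        for r_o, r_b, r_m in zip(img, blurred, subject_mask)
    ]
```

With a single bright pixel marked as the subject, `selective_motion_blur([[0.0, 0.0, 1.0, 0.0, 0.0]], [[0, 0, 1, 0, 0]], 1)` keeps that pixel at 1.0 while its immediate neighbours pick up a third of its brightness, the kind of smear you would expect from motion. A real implementation would presumably blur along the detected direction of movement rather than always horizontally.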

The next major AI improvement is less about a particular shooting mode or style and more about an aspect of image processing that camera manufacturers have historically overlooked. It's called Real Tone, and its purpose, in Google's words, is to 'highlight the nuances of all skin tones equally.'

The automated features in many image processing pipelines have historically been skewed toward lighter skin, often because the image sets companies use to train their machine-learning algorithms feature predominantly light-skinned subjects. To build a more equitable image processing pipeline, Google worked with various Black, Indigenous and people of color (BIPOC) photographers to capture thousands of images, creating an image set that Google says is 25 times more diverse than its previous dataset. This dataset was then used to train the new capture and processing workflow in the Pixel 6 and Pixel 6 Pro.

The result is an image processing pipeline that better represents a more diverse range of skin tones, as well as a better capture experience that more accurately detects faces of BIPOC individuals and improves both auto white balance and auto exposure when capturing photographs of BIPOC subjects.

The last camera feature Google touted is a new partnership with Snap (the parent company of Snapchat), which will let Snapchat users launch the app straight from the lock screen to capture images instantly. Once the phone is unlocked, those images can be edited with Snapchat's full array of AR lenses and features, including an upcoming live transcription feature.

With the camera technology out of the way, let's look at the displays on the front of the devices. The Pixel 6 features a 6.4” 90Hz AMOLED display (1080 x 2400 pixels, 20:9 ratio, approx. 411 ppi density), while the Pixel 6 Pro features a 6.71” 10-120Hz LTPO AMOLED display (1440 x 3120 pixels, 19.5:9 ratio, approx. 512 ppi density) with HDR10+ support. Both screens are covered by Corning Gorilla Glass Victus, and while both screens are slightly beveled around the edges, the Pixel 6 Pro’s screen also curves slightly along its vertical sides.
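The approximate pixel densities quoted above follow from each screen's resolution and diagonal size: divide the diagonal length in pixels by the diagonal length in inches. A quick check in Python (`pixel_density` is our own helper, and the advertised diagonals are rounded, so treat the results as approximate):

```python
import math


def pixel_density(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


print(round(pixel_density(1080, 2400, 6.4)))   # Pixel 6     → 411
print(round(pixel_density(1440, 3120, 6.71)))  # Pixel 6 Pro → 512
```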

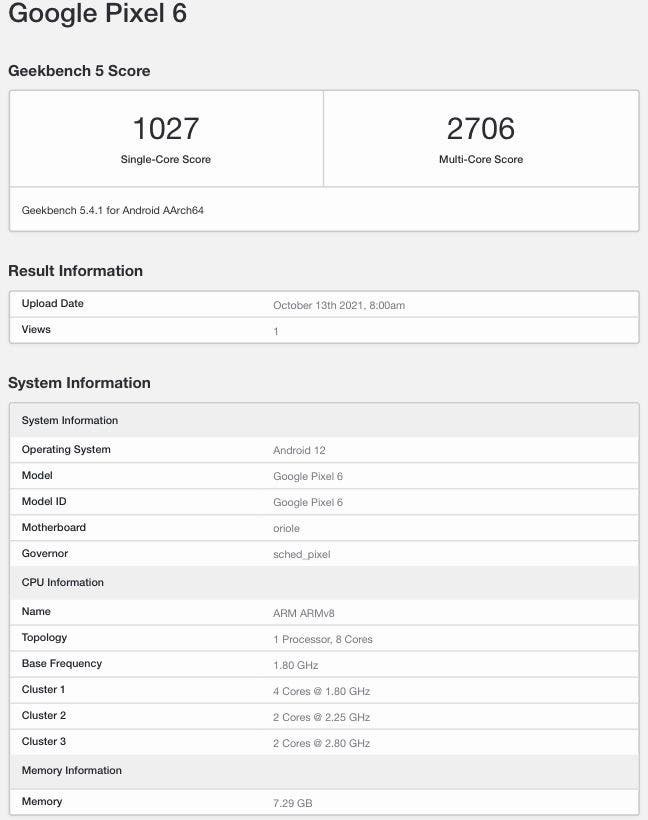

Powering the devices is a custom-designed ARM system on a chip (SoC) that Google is calling ‘Tensor.’ Google directly compared this chipset to Qualcomm’s Snapdragon 888 chipset, which is inside an array of current Android flagship devices. The chipset features two high-power cores, two mid-range cores, four efficiency cores and an array of use-specific cores for security, image processing and more. The Pixel 6 includes 8GB of RAM while the 6 Pro includes 12GB.

An illustration of Google's new 'Tensor' chipset.

On the communications front, both devices feature Wi-Fi (802.11 a/b/g/n/ac/6e), Bluetooth 5.2, GPS and NFC, and have a single USB-C port on the bottom. The Pixel 6 has a 4614 mAh Li-Po battery, while the Pixel 6 Pro gets a slightly larger 5003 mAh Li-Po battery.

Other features are in line with recent Android flagships, including Qi wireless charging (as well as reverse wireless charging), an IP68 rating, in-screen fingerprint readers, stereo speakers and fast charging at up to 30W.

The Pixel 6 starts at $599 for the 128GB model and is available to order starting today in Sorta Seafoam, Kinda Coral and Stormy Black colorways. The Pixel 6 Pro starts at $899 for the 128GB model and is available to order starting today in Cloudy White, Sorta Sunny and Stormy Black colorways.

dpreview.com, 2021-10-20 22:08